Why Your PRD Isn’t Working for AI Development

The Specification Pyramid Framework

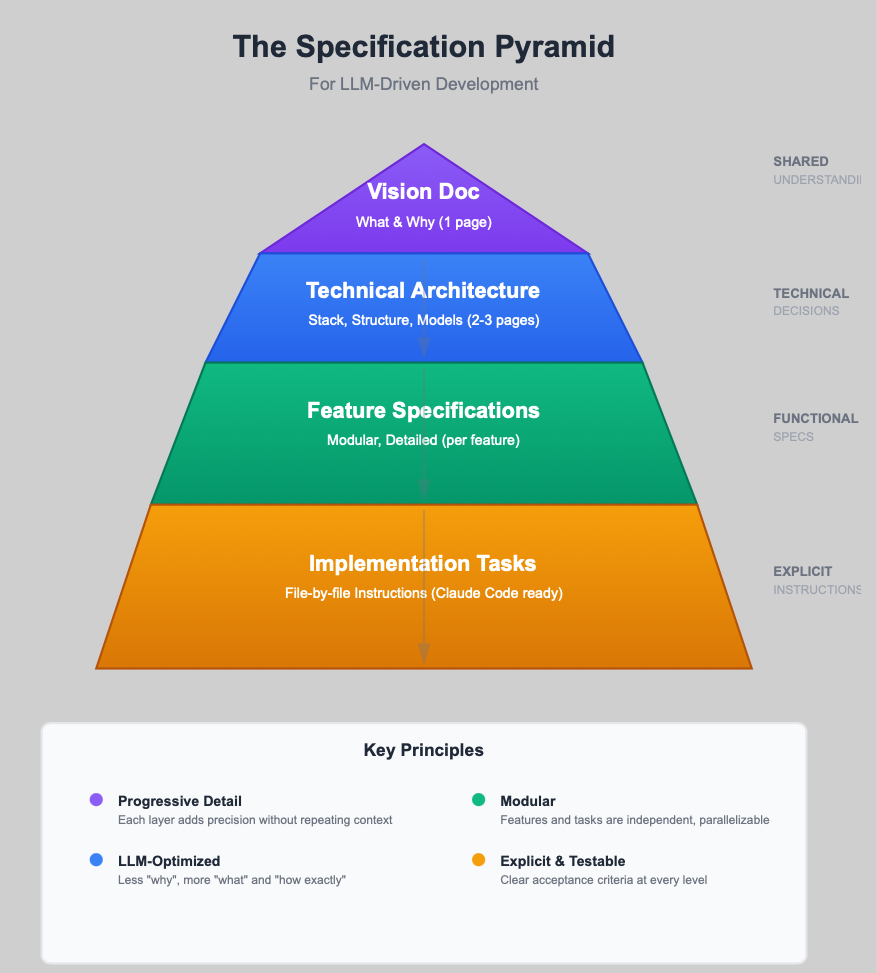

TL;DR: Traditional PRDs don’t work for AI. When I asked Claude how to fix that, it invented the “Specification Pyramid” — a four-layer framework for communicating with AI that makes development faster, clearer, and more precise.

What happens when AI starts inventing its own development methodologies?

I had an idea for an app — multiple AI personas that could debate and collaborate in chat rooms, like assembling your own advisory board of specialized experts.

I had been testing different ways of working with AI tools for development: writing detailed Product Requirements Documents (PRDs), creating feature specs, and experimenting with various levels of detail to see what produced the best results from LLMs.

The output wasn’t terrible, but it wasn’t precise enough. The implementations were generic. Details I thought were obvious weren’t getting captured. I was spending too much time clarifying and refining.

The Problem With What I Was Doing

When I wrote requirements like “Create a user-friendly interface for managing personas,” Claude would generate something. It would work. But it wouldn’t match what I had in my head.

Was it Claude’s fault? No. The spec didn’t say “card-based layout with color-coded roles” or “specific Tailwind classes for styling.” It said “user-friendly.”

LLMs are literal. They work from exactly what you specify, and where the spec is silent, they fall back on generic defaults rather than on what you meant.

There’s no inferring, no “reading between the lines,” no asking a colleague what you meant in that Slack message yesterday.

The Conversation That Changed Everything

So I did something different. Instead of just feeding Claude requirements and hoping for better results, I asked Claude itself:

“Next step is to build a PRD. Then we can break this up into features that Claude can work on. I need clear instructions for the LLM to produce what I want. Do you have suggestions that might be better than this?”

What came back was eye-opening.

Claude suggested a completely different structure — something it called the Specification Pyramid. Four layers of progressive detail:

1. Vision Doc (1 page)

↓

2. Technical Architecture (2–3 pages)

↓

3. Feature Specs (modular, detailed)

↓

4. Implementation Tasks (Claude Code ready)

I asked if this was a known methodology.

“I just came up with it,” Claude told me. “It’s not a formal methodology or industry standard term. I created it on the spot.”

Claude explained its reasoning:

Traditional PRDs were designed for human developers who:

Understand context from meetings and Slack conversations

Can look at similar apps for reference

Make subjective judgments about what “looks good”

Ask clarifying questions when something’s ambiguous

Have experience that fills in the gaps

LLMs have none of that. They have only what you give them, interpreted literally.

The Specification Pyramid was Claude’s answer to that gap.

It wasn’t a borrowed framework — it was AI itself saying:

“Here’s how you should communicate with AI.”

That moment stopped me.

I wasn’t just using an AI to build an app — I was watching an AI design a better process for itself.

Understanding the Pyramid

Claude explained each layer in detail.

Layer 1: Vision Document (1 page)

What you’re building and why

Core use cases (specific, not generic)

What you’re explicitly not building

Success criteria

Layer 2: Technical Architecture

Exact tech stack and versions

Complete data models and database schemas

File structure

Every interface defined
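To make “complete data models” and “every interface defined” concrete, here is a minimal TypeScript sketch of what part of Layer 2 might look like for the persona app. The entities and fields (`Persona`, `ChatRoom`, `Message`, `PersonaRole`, `colorHex`, and the `isPersona` guard) are hypothetical illustrations, not the architecture Claude actually produced. The point is that types, relationships, and allowed values are written down instead of implied.

```typescript
// Hypothetical Layer 2 data models for the persona chat app.
// Entity names, fields, and role values are illustrative assumptions.

type PersonaRole = "strategist" | "critic" | "researcher" | "facilitator";

const PERSONA_ROLES: readonly PersonaRole[] = ["strategist", "critic", "researcher", "facilitator"];

interface Persona {
  id: string;
  name: string;
  role: PersonaRole;
  colorHex: string;     // "#RRGGBB", drives the color-coded role badge
  systemPrompt: string; // instructions that define the persona's expertise
  createdAt: string;    // ISO 8601 timestamp
}

interface ChatRoom {
  id: string;
  title: string;
  personaIds: string[]; // a room references one or more personas; a persona can join many rooms
}

interface Message {
  id: string;
  roomId: string;                 // belongs to exactly one ChatRoom
  authorPersonaId: string | null; // null when the human user wrote it
  body: string;
  sentAt: string;                 // ISO 8601 timestamp
}

// Runtime guard so rows loaded from storage can be checked against the spec.
function isPersona(value: unknown): value is Persona {
  if (typeof value !== "object" || value === null) return false;
  const { id, name, role, colorHex, systemPrompt, createdAt } = value as Record<string, unknown>;
  return (
    typeof id === "string" &&
    typeof name === "string" &&
    PERSONA_ROLES.some((r) => r === role) &&
    typeof colorHex === "string" &&
    /^#[0-9A-Fa-f]{6}$/.test(colorHex) &&
    typeof systemPrompt === "string" &&
    typeof createdAt === "string"
  );
}
```

Spelling out `PersonaRole` as a closed set of values is the kind of detail “color-coded roles” needs before an AI can implement it without guessing.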

Layer 3: Feature Specifications (per feature)

Precise UI layouts

Exact styling specifications

Every error case and how it’s handled

Component breakdowns

Testable acceptance criteria

Dependencies explicitly listed
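One way to keep Layer 3 honest is to treat each feature spec as structured data and check it before handing it off. The sketch below assumes a hypothetical `FeatureSpec` shape mirroring the bullets above and a hypothetical `lintSpec` helper that flags missing sections and feeling-words like “user-friendly”. The list of vague terms is an illustrative guess, not a standard.

```typescript
// Hypothetical Layer 3 feature spec shape; field names are assumptions.
interface ErrorCase {
  condition: string; // what goes wrong
  behavior: string;  // exactly what the UI should do
}

interface FeatureSpec {
  id: string;
  title: string;
  uiLayout: string;
  styling: string;
  errorCases: ErrorCase[];
  components: string[];
  acceptanceCriteria: string[]; // each one should be testable
  dependsOn: string[];          // ids of other feature specs
}

// Words that describe a feeling rather than a buildable result.
// This list is an illustrative assumption, not a standard.
const VAGUE_TERMS = ["user-friendly", "intuitive", "clean", "modern", "nice", "simple"];

// Returns a list of problems; an empty list means no obvious gaps were found.
function lintSpec(spec: FeatureSpec): string[] {
  const problems: string[] = [];
  if (spec.errorCases.length === 0) problems.push("no error cases specified");
  if (spec.components.length === 0) problems.push("no component breakdown");
  if (spec.acceptanceCriteria.length === 0) problems.push("no acceptance criteria");

  const text = [spec.uiLayout, spec.styling, ...spec.acceptanceCriteria].join(" ").toLowerCase();
  for (const term of VAGUE_TERMS) {
    // \b word boundaries so "nice" does not match inside "service".
    if (new RegExp(`\\b${term}\\b`).test(text)) problems.push(`vague term: "${term}"`);
  }
  return problems;
}

// Usage: the kind of requirement that produced generic results...
const vague: FeatureSpec = {
  id: "F-03",
  title: "Persona manager",
  uiLayout: "Create a user-friendly interface for managing personas",
  styling: "",
  errorCases: [],
  components: [],
  acceptanceCriteria: [],
  dependsOn: [],
};
const vagueProblems = lintSpec(vague);
// → ["no error cases specified", "no component breakdown", "no acceptance criteria", 'vague term: "user-friendly"']

// ...versus a spec that leaves less to interpretation.
const precise: FeatureSpec = {
  id: "F-03",
  title: "Persona manager",
  uiLayout: "Card grid: 3 columns at 1024px and wider, 1 column below; each card shows name, role badge, Edit and Delete buttons",
  styling: "Cards use Tailwind rounded-lg shadow p-4; role badge background is the persona's colorHex",
  errorCases: [{ condition: "Name is empty on save", behavior: "Show 'Name is required' under the field and keep Save disabled" }],
  components: ["PersonaGrid", "PersonaCard", "PersonaForm"],
  acceptanceCriteria: ["Clicking Delete asks for confirmation, then removes the card from the grid"],
  dependsOn: ["F-01"],
};
const preciseProblems = lintSpec(precise); // → []
```

A check like this cannot judge whether a spec is good, only whether obvious sections are missing or whether it leans on words an AI has to interpret.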

Layer 4: Implementation Tasks

File-by-file instructions

Specific code to add or modify

Clear definition of “done”

Test verification steps

Dependency mapping for parallel execution
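A Layer 4 task can be captured the same way. This is a minimal sketch assuming each task is a record of its files, instructions, definition of done, verification steps, and dependencies; `ImplementationTask` and `validateTasks` are hypothetical names, not part of Claude Code or any other tool.

```typescript
// Hypothetical Layer 4 task record; field names are illustrative assumptions.
interface ImplementationTask {
  id: string;
  files: string[];      // file-by-file scope of the change
  instructions: string; // the specific code to add or modify
  doneWhen: string[];   // explicit definition of "done"
  verify: string[];     // test verification steps
  dependsOn: string[];  // ids of tasks that must finish first
}

// Flags tasks that are not ready to hand to an AI coding agent.
function validateTasks(tasks: ImplementationTask[]): string[] {
  const ids = new Set(tasks.map((t) => t.id));
  const errors: string[] = [];
  for (const t of tasks) {
    if (t.files.length === 0) errors.push(`${t.id}: no files listed`);
    if (t.doneWhen.length === 0) errors.push(`${t.id}: missing definition of done`);
    if (t.verify.length === 0) errors.push(`${t.id}: missing verification steps`);
    for (const dep of t.dependsOn) {
      if (dep === t.id) errors.push(`${t.id}: depends on itself`);
      else if (!ids.has(dep)) errors.push(`${t.id}: unknown dependency "${dep}"`);
    }
  }
  return errors;
}

// Usage with two hypothetical tasks from the persona app.
const tasks: ImplementationTask[] = [
  {
    id: "T1",
    files: ["src/db/schema.ts"],
    instructions: "Create the personas, chat_rooms, and messages tables.",
    doneWhen: ["All three tables exist after the app starts"],
    verify: ["Schema unit tests pass"],
    dependsOn: [],
  },
  {
    id: "T2",
    files: ["src/store/personaStore.ts"],
    instructions: "Add a Zustand store with add, update, and remove actions for personas.",
    doneWhen: ["Store actions persist changes through the T1 tables"],
    verify: [],
    dependsOn: ["T1", "T7"],
  },
];
const taskErrors = validateTasks(tasks);
// → ['T2: missing verification steps', 'T2: unknown dependency "T7"']
```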

The key insight Claude shared:

“Traditional specs are written for humans who infer context. LLMs need the inverse — maximum precision, minimum fluff.”

Why This Structure Works

Each layer builds on the previous one, adding precision without repeating context.

Vision gives strategic direction — the human “why.”

Architecture eliminates ambiguity — down to versions and schemas.

Feature Specs leave nothing to interpretation — every element, action, and dependency is explicit.

Tasks become atomic and executable — enabling parallel work across agents or developers.

That last point is crucial. The Specification Pyramid isn’t just about clarity — it’s about speed through concurrency.

When dependencies are mapped explicitly, multiple AI agents (or Claude instances) can build in parallel. Traditional PRDs assume linear human coordination. The Pyramid assumes parallel AI execution.

Testing the Framework

I didn’t sit down to write these specs myself — that would’ve defeated the purpose.

Instead, I worked with Claude to generate them. I spent about an hour answering questions about my app — what I wanted to build, who it was for, and what the core features were.

Then I asked:

“Which language, framework, and tech stack would give you the highest success rate in delivering what I want?”

Claude recommended:

React 18.2.0 + TypeScript 5.0.4

Electron 25.3.0

Zustand for state management

SQLite 3.42.0 with SQLCipher

Tailwind CSS 3.3.2

Why this stack? Because these tools are so widely used, Claude has seen a huge number of examples of them. It knows their patterns, pitfalls, and best practices, so it can generate cleaner, more reliable code.

Programming languages don’t matter anymore. What matters is which tools the AI can implement most reliably.

(More on this in my next post: “Programming Languages Are Dead.”)

From that one-hour Q&A, Claude generated:

A complete Vision Doc

Full Technical Architecture (47 pages, far beyond the 2–3 pages Claude first proposed)

25 Feature Specifications

Task breakdowns for each feature

I didn’t write the specs. I answered questions. Claude wrote them.

Total time: ~1 hour of collaborative Q&A.

The Result

Then I handed those specs to Claude Code.

What happened next:

Claude Code spent ~6 hours writing code

Built the base features of the app

The app worked on the first try

No debugging.

No “wait, this isn’t what I meant.”

No refactoring.

The UI looked right. The database was solid. Error handling worked. Personas behaved as expected.

Total time:

1 hour: Collaborative spec creation

6 hours: Claude Code implementation

2 hours: Testing and tweaks

→ 9 hours total from idea to working app

Compared to my estimate for traditional development:

Specs: 8–10 hours

Implementation: 20–40 hours

Debugging: 10–20 hours

→ 38–70 hours total

Or my previous AI attempts without the Pyramid:

Vague requirements: 2 hours

Multiple Claude tries: 15+ hours

Back-and-forth clarifications: 12+ hours

Result: frustration

This time? Smooth from start to finish.
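Put as ratios, using the figures above (the traditional and previous-attempt numbers are estimates, not measurements, so treat the result as rough):

```latex
\begin{aligned}
T_{\text{pyramid}} &= 1 + 6 + 2 = 9\ \text{h} \\
T_{\text{traditional}} &\in [\,8 + 20 + 10,\ 10 + 40 + 20\,] = [\,38,\ 70\,]\ \text{h} \\
\frac{T_{\text{traditional}}}{T_{\text{pyramid}}} &\in \left[\tfrac{38}{9},\ \tfrac{70}{9}\right] \approx [\,4.2,\ 7.8\,] \\
T_{\text{previous AI}} &\geq 2 + 15 + 12 = 29\ \text{h}, \qquad \frac{29}{9} \approx 3.2
\end{aligned}
```

That is roughly a 4x to 8x reduction against the traditional estimate, and more than 3x against my earlier attempts without the Pyramid.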

What This Demonstrates

The power isn’t just in the structure — it’s in the collaboration.

AI can now help you spec for AI.

Claude knows what detail it needs and can extract it through dialogue.

Being technical still matters — you need to review and validate what AI produces — but your focus shifts from typing code to directing precision.

The takeaway:

You don’t need perfect specs — AI can generate them from conversation.

You don’t need to know every tech stack — AI will tell you which it knows best.

You don’t have to implement everything — AI handles that.

You do need a clear product vision.

And you still need judgment.

This isn’t “anyone can build software.” Not yet.

But it’s much closer than before.

The Parallel Execution Advantage

Because dependencies are explicit, you can see at a glance:

Which features can be built independently

Which depend on others

Which tasks within a feature can run in parallel
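Explicit dependencies are what make that at-a-glance view computable. The sketch below, a level-by-level variant of Kahn’s topological sort, groups tasks into “waves” where every task depends only on tasks in earlier waves, so each wave could in principle be handed to parallel agents. The `Task` shape, the `parallelWaves` function, and the task ids are hypothetical; how you actually dispatch work to multiple Claude Code instances is outside this sketch.

```typescript
// Hypothetical task shape: only the fields needed for scheduling.
interface Task {
  id: string;
  dependsOn: string[];
}

// Groups tasks into waves: every task in a wave depends only on tasks in
// earlier waves, so the tasks inside one wave can run in parallel.
// This is a level-by-level variant of Kahn's topological sort.
function parallelWaves(tasks: Task[]): string[][] {
  const known = new Set(tasks.map((t) => t.id));
  const pending = new Map<string, Set<string>>();
  for (const t of tasks) {
    for (const dep of t.dependsOn) {
      if (!known.has(dep)) throw new Error(`${t.id} depends on unknown task ${dep}`);
    }
    pending.set(t.id, new Set(t.dependsOn));
  }

  const waves: string[][] = [];
  while (pending.size > 0) {
    // Ready = every dependency has already been placed in an earlier wave.
    const ready = Array.from(pending.entries())
      .filter(([, deps]) => deps.size === 0)
      .map(([id]) => id)
      .sort();
    if (ready.length === 0) {
      throw new Error(`dependency cycle among: ${Array.from(pending.keys()).join(", ")}`);
    }
    waves.push(ready);
    for (const id of ready) pending.delete(id);
    // Remove the newly scheduled tasks from everyone else's dependency sets.
    pending.forEach((deps) => ready.forEach((id) => deps.delete(id)));
  }
  return waves;
}

// Usage with hypothetical feature-level tasks for the persona app.
const waves = parallelWaves([
  { id: "db-schema", dependsOn: [] },
  { id: "persona-store", dependsOn: ["db-schema"] },
  { id: "room-store", dependsOn: ["db-schema"] },
  { id: "persona-ui", dependsOn: ["persona-store"] },
  { id: "chat-ui", dependsOn: ["persona-store", "room-store"] },
]);
// → [["db-schema"], ["persona-store", "room-store"], ["chat-ui", "persona-ui"]]
```

Here the two stores could be built by separate agents at the same time, and so could the two UIs once both stores exist. A cycle in the dependency map surfaces as an error instead of a stalled build.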

That means:

Multiple Claude Code instances can work simultaneously

Agents can collaborate instead of queueing

Development speed scales with precision

The Specification Pyramid assumes concurrency — not human-style sequential workflows.

When Claude created it, it was thinking about how AI agents could work together most efficiently.

That’s the future of dev methodology: frameworks designed by AI, for AI.

Try It Yourself

You don’t have to adopt the whole framework at once. Start small:

Write a one-page Vision Doc — what you’re building, who it’s for, what’s in or out of scope.

Define real data models — interfaces, types, relationships.

Fully spec one feature — layout, styling, errors, acceptance criteria.

Hand that to your AI tool and see what happens.

Or better yet — let AI help you write the specs.

Have a conversation. Answer its questions. Watch it generate the documentation.

My bet: your first build will work.

Final Thought

Claude didn’t just build my app.

It created a better process for directing its own work.

That’s not automation.

That’s co-evolution — humans providing direction, AI creating precision.

The Specification Pyramid is one bridge between the two.

Maybe not the only one, but right now, it’s working.

I’ve been building software for decades, and I’ve never seen anything like this.

Watching an AI invent a framework for directing its own work isn’t just technical progress.

That’s a new era of collaboration.

Next post: “Programming Languages Are Dead” — Why the tools you know don’t matter anymore, and what actually does.

Building something with AI? Want to compare notes on what’s working? Reply to this email — I read every one.